By Karen Riccio

AI is destined to become the most impactful technology since the advent of the internet. But it’s dead in the water without enough key resources.

As a result, AI is also proving to be challenging to implement.

Early on, we discovered that super chips and GPU servers were key to furthering the development of AI.

Then we learned that many data centers charged with housing these massive servers lacked adequate infrastructure to support AI.

AI would be just as stuck if data centers, including those owned by hyperscalers like Microsoft (MSFT), Amazon.com (AMZN) and Alphabet/Google (GOOGL), couldn’t secure enough electricity to power these energy-intensive servers.

Relying on the crippled U.S. grid, which is more than a century old, isn’t even an option.

Even before the emergence of AI, environmentalists called data centers “energy hogs” because they consume between 1% and 2% of the world’s power, according to the International Energy Agency.

Securing enough power is such a high priority that a trend toward reopening nuclear power plants is well underway.

I just wrote about Microsoft partnering with the owner of Three Mile Island to reopen the site of one of the biggest disasters in U.S. history and buy 20 years’ worth of energy generated by the plant.

I’ll have more on this extreme challenge in a moment.

More recently, the challenges of keeping all those servers cool came to light.

To prevent the overheating (a.k.a. melting) of GPUs, temperatures must be kept between 64.4°F and 71.6°F 24 hours a day, seven days a week.

Water is key for accomplishing this. It transports the heat generated in the data centers into cooling towers to help it escape the building, similar to how the human body uses sweat to keep cool.

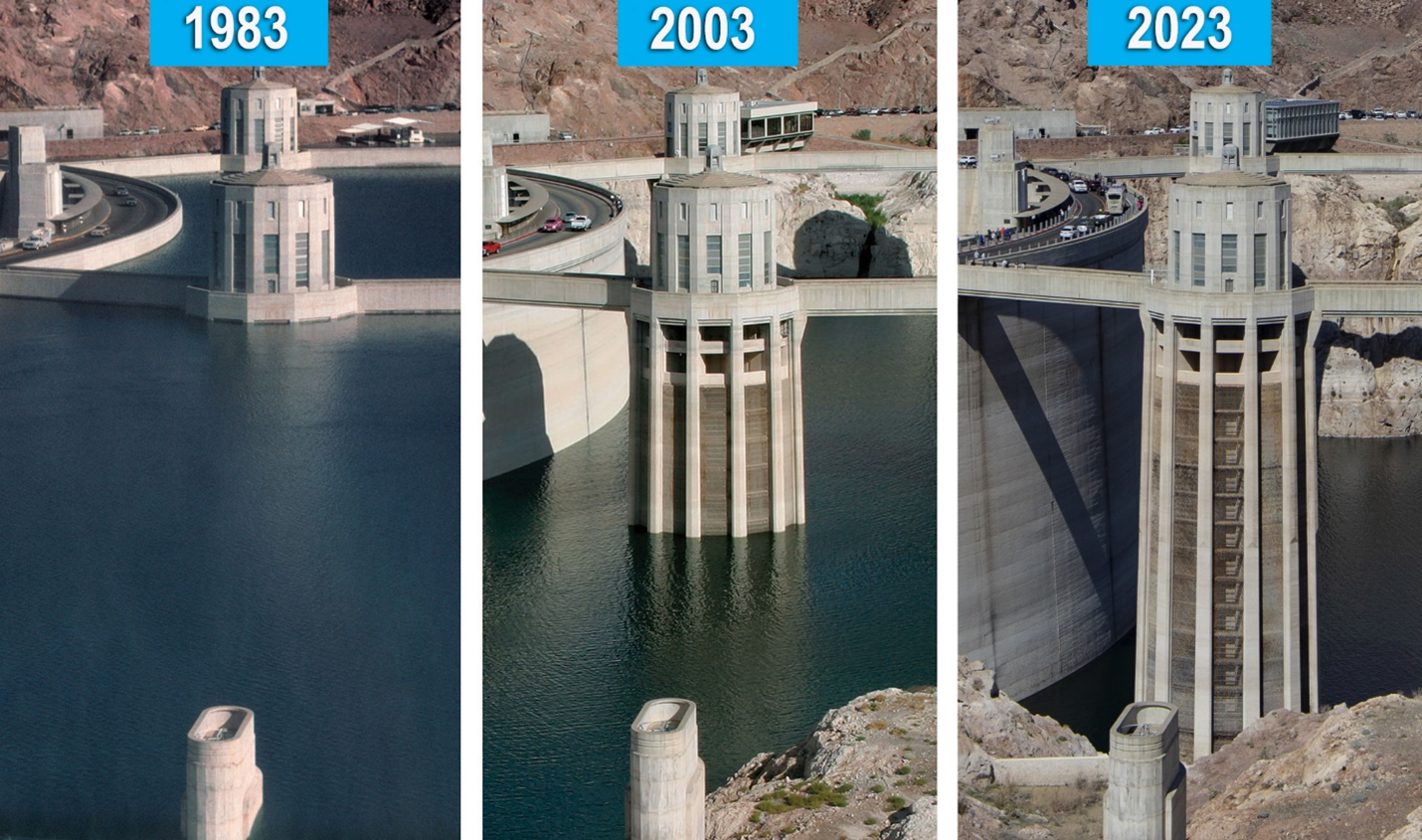

This photograph shows how far water levels have fallen at Hoover Dam, mirroring scarcity throughout the U.S.

A common and increasingly popular strategy in data centers involves liquid cooling … or even the actual submerging of servers in water.

It’s one of the most efficient cooling technologies on the market, if a bit more difficult than traditional air-cooling methods.

Still, water is a finite resource. And demand for it is growing.

I mean, an average non-AI data center already uses 3 to 5 million gallons of water a day — equal to how much water a city with up to 50,000 people consumes.
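For readers who want to check that comparison, here is a quick Python sketch that spreads the daily water figures across a city of 50,000. The function name is made up for illustration, and using the top of the “up to 50,000” range is my assumption, not a figure from any utility.

```python
def gallons_per_person_per_day(daily_gallons: float, population: int) -> float:
    """Spread a daily water volume evenly across a population."""
    return daily_gallons / population


# Figures quoted above: 3 to 5 million gallons a day, a city of up to 50,000 people.
CITY_POPULATION = 50_000  # assumption: the upper bound of "up to 50,000"

for daily_gallons in (3_000_000, 5_000_000):
    per_person = gallons_per_person_per_day(daily_gallons, CITY_POPULATION)
    print(f"{daily_gallons:,} gallons/day -> {per_person:.0f} gallons per person per day")
```

Under those assumptions, the data center’s daily draw works out to 60 to 100 gallons per resident per day.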

But when you consider the more than 100 million active users of ChatGPT to date, along with the introduction of GPT-4, the water consumption resulting from day-to-day usage is even more staggering.

Get this: A single question asked of ChatGPT by one person results in the consumption of two 8-ounce glasses of water from a data center’s off-site storage.

On top of that, GPT-4 is almost 10 times as large as GPT-3, possibly increasing the water consumption severalfold.

Meanwhile, training a single AI model like GPT-3 could require as much as 700,000 liters of water, a figure that rivals the annual water consumption of several households.

Microsoft, with 200 data centers across the globe, recently disclosed that its global water consumption spiked 34% from 2021 to 2022 (to nearly 1.7 billion gallons, or more than 2,500 Olympic-sized swimming pools), a sharp increase compared to previous years that outside researchers tie to its AI research.
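The swimming-pool comparison is easy to sanity-check. The sketch below is only a back-of-the-envelope calculation: it assumes an Olympic pool of 50 m by 25 m by 2 m (2.5 million liters), treats “nearly 1.7 billion gallons” as a round 1.7 billion, and uses helper names I made up for illustration.

```python
LITERS_PER_US_GALLON = 3.785411784         # definition of the US liquid gallon
OLYMPIC_POOL_LITERS = 50 * 25 * 2 * 1_000  # assumption: 50 m x 25 m x 2 m pool


def gallons_to_olympic_pools(gallons: float) -> float:
    """Convert US gallons to the number of assumed-size Olympic pools."""
    return gallons * LITERS_PER_US_GALLON / OLYMPIC_POOL_LITERS


def prior_year_from_increase(current: float, pct_increase: float) -> float:
    """Back out last year's value from this year's value and a percent increase."""
    return current / (1 + pct_increase / 100)


water_2022_gallons = 1.7e9  # assumption: "nearly 1.7 billion" rounded up

pools = gallons_to_olympic_pools(water_2022_gallons)
water_2021_gallons = prior_year_from_increase(water_2022_gallons, 34)

print(f"2022 usage: about {pools:,.0f} Olympic pools")
print(f"Implied 2021 usage: about {water_2021_gallons / 1e9:.2f} billion gallons")
```

With those assumptions, the 2022 figure comes to a bit over 2,500 pools, consistent with the number above, and the 34% jump implies 2021 usage of roughly 1.27 billion gallons.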

So, without additional water sources or other ways to keep equipment cool, AI doesn’t stand a chance of developing past early-stage apps like ChatGPT. That would be a big disappointment considering its potential.

As a result, companies — private and public — are scrambling to innovate. That means even more investment opportunities to profit from AI outside of the Magnificent 7 stocks.

Here on Earth and on solid ground, climates differ by location, so data center owners often build in or relocate to regions with cooler year-round temperatures.

This requires less power and water, and supplies of both are often cheaper.

Data center owners also target sites near alternative energy sources such as rivers and solar or wind farms. Open land near utilities is also in high demand.

Today, though, they’re venturing where others have never gone before — underwater and, eventually, even outer space.

For this article, I’ll stick with the most feasible and actionable place: Under the seas.

In fact, thousands of servers, housed in underwater data centers, have been submerged in the Pacific Ocean and Atlantic Ocean.

The concept behind underwater data centers is simple enough: You take IT infrastructure (like servers), install it in a watertight vessel and then submerge the vessel.

This is precisely what Microsoft did with Project Natick, an underwater data center that it first launched in 2015 off the coast of California, then off the coast of Scotland in 2018.

It was set 117 feet deep on the seafloor off Scotland's coast for two years and housed 864 servers in a vessel approximately 40 feet long and 9 feet in diameter with a low-pressure, dry nitrogen environment.

Microsoft ended the project in 2020. However, the company said its underwater data centers were “reliable, practical and used energy sustainably.”

The successful undertaking caught the attention of others …

Privately owned Subsea Cloud designs and deploys commercially available underwater data centers. It uses non-pressurized “pods” for its servers. They contain liquid to protect the technology inside.

Subsea Cloud claims its pods can withstand depths of up to 3,000 feet. The pods use passive cooling methods, which reduce their carbon footprint and impact on the surrounding environment.

In 2023, Highlander, a company that specializes in underwater data centers, launched a commercial facility in the water near Hainan, China.

The data center weighs about 1,433 tons and is about 115 feet underwater. The company plans to deploy 100 more modules in the near future to reduce land, fresh water and electricity use.

These modules weigh 1,300 tons each and serve as both data repositories and supercomputers.

Each can process over 4 million high-definition photos in 30 seconds and uses seawater as a natural cooling source to increase its overall energy efficiency by 40% to 60%.

Beyond needing less water and power, placing servers and data centers beneath the sea has other benefits, including no need for on-site personnel.

Because these underwater containers provide an atmosphere of dry nitrogen, none of the equipment is exposed to oxygen, which is far more corrosive than nitrogen. Humidity is also lower, further reducing the risk of corrosion and extending the life of servers.

This may seem like a far-fetched approach. But I think it emphasizes how far — or in this case how deep — companies are willing to go to prevent the arrested development of AI and other power- and water-hungry technologies.

Besides, with oceans covering about 70% of the Earth's surface, there is no lack of real estate for deploying underwater data centers.

Whether underwater data centers gain traction has yet to be seen. But you can be certain that the multi-year boom in digital infrastructure will continue as companies keep looking outside the box to ensure that AI’s development stretches far beyond ChatGPT.

This topic is so vast and important that I can’t fit it all into this article. Fortunately, our AI expert has been on this case for a while.

He just put the finishing touches on a new presentation he’s calling “Beyond AI.” I urge you to check it out, along with the high-flying investments he found, by clicking here.

Best,

Karen Riccio